Photo by Google DeepMind on Pexels

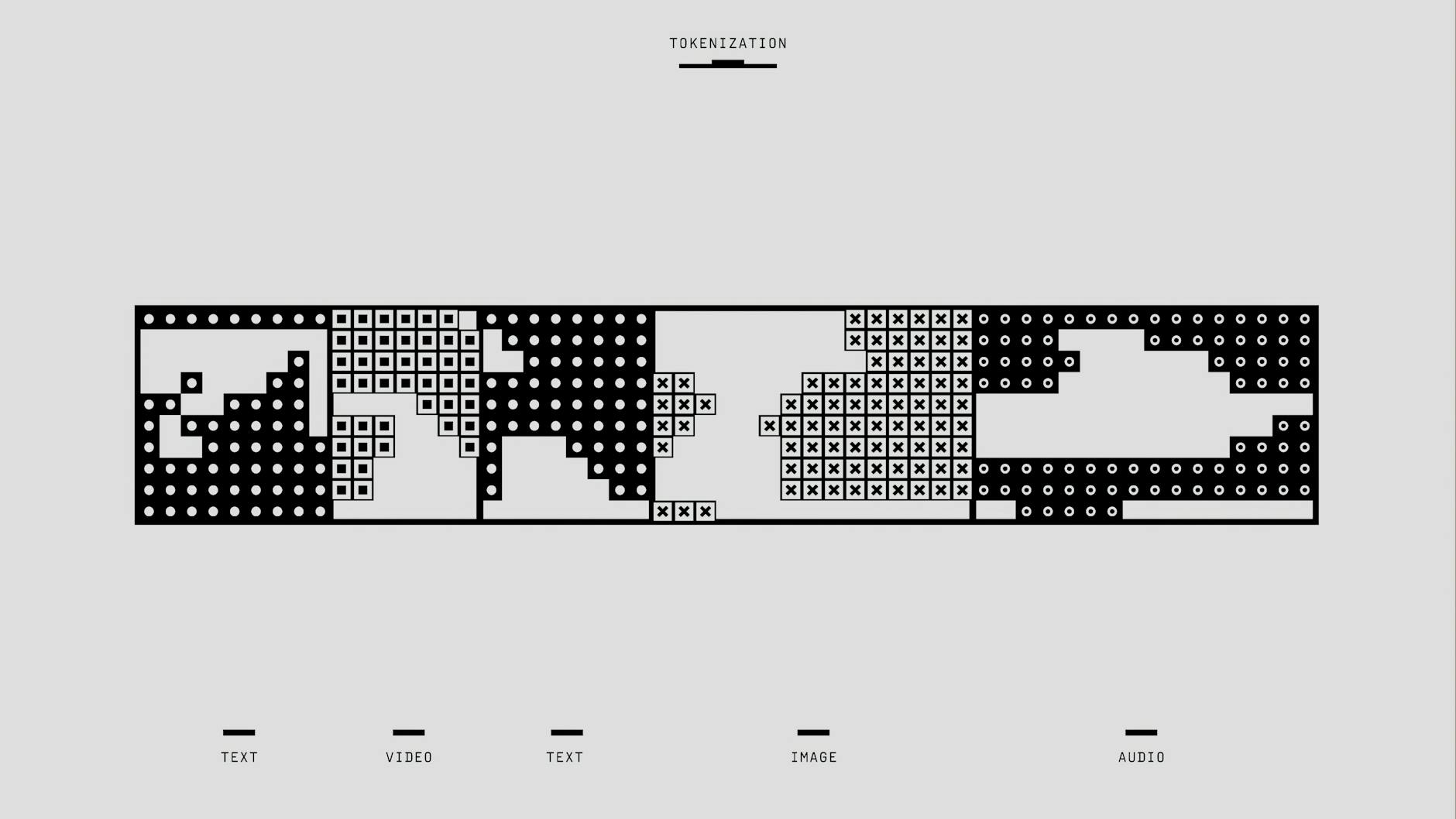

Deploying large language models (LLMs) such as Claude and GPT involves more than the initial training costs. A new analysis points to significant hidden expenses tied to tokenization that could make Claude models 20-30% more expensive to operate in enterprise environments than their GPT counterparts. The study examines how the number of tokens each model's tokenizer produces for the same input text affects cost. Because API usage is billed per token, a tokenizer that splits identical text into more tokens raises the bill even when per-token prices are similar. The existence of different tokenizers is well known, but this research quantifies how much those differences add to overall operating costs, a crucial consideration for businesses choosing between LLM models.
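The mechanism comes down to simple arithmetic. The following minimal Python sketch assumes that cost is just the token count multiplied by a per-million-token price. The `TokenizerProfile` class, the `monthly_cost` and `relative_overhead` helpers, the model names, and every number (tokens per word, prices, monthly volume) are hypothetical. None of them are figures from the study or real vendor pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenizerProfile:
    """Hypothetical description of a model's tokenizer and pricing.

    tokens_per_word: average tokens the tokenizer emits per word of input
    usd_per_million_tokens: illustrative price, not real vendor pricing
    """
    name: str
    tokens_per_word: float
    usd_per_million_tokens: float


def monthly_cost(profile: TokenizerProfile, words_per_month: int) -> float:
    """Cost of processing a given volume of text, billed per million tokens."""
    tokens = words_per_month * profile.tokens_per_word
    return tokens / 1_000_000 * profile.usd_per_million_tokens


def relative_overhead(candidate: TokenizerProfile,
                      baseline: TokenizerProfile,
                      words_per_month: int) -> float:
    """Fractional extra cost of `candidate` over `baseline` for the same text."""
    return (monthly_cost(candidate, words_per_month)
            / monthly_cost(baseline, words_per_month)) - 1.0


if __name__ == "__main__":
    words = 500_000_000  # hypothetical monthly volume of input text
    # Same per-token price; model_b's tokenizer emits 25% more tokens per word.
    model_a = TokenizerProfile("model_a", tokens_per_word=1.3, usd_per_million_tokens=3.00)
    model_b = TokenizerProfile("model_b", tokens_per_word=1.625, usd_per_million_tokens=3.00)

    overhead = relative_overhead(model_b, model_a, words)
    print(f"{model_b.name} costs {overhead:.1%} more than {model_a.name}")
    # → model_b costs 25.0% more than model_a
```

With equal per-token prices, a tokenizer that emits 25% more tokens for the same text yields a bill that is 25% higher. In practice, the gap also depends on differences in per-token price and on the mix of input and output tokens, which this sketch ignores.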