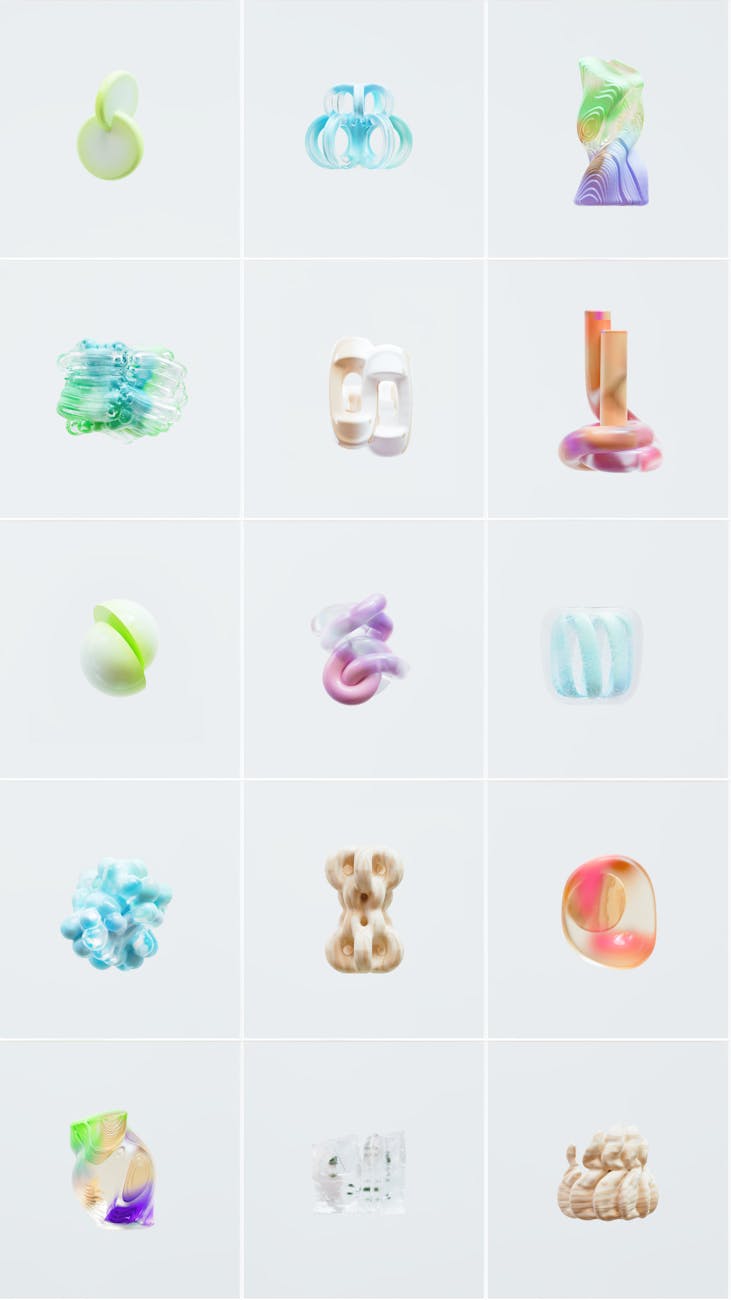

Photo by Google DeepMind on Pexels

A new study finds that multilingual artificial intelligence models not only reflect existing biases but also spread them across cultures. Researchers, including prominent AI ethics expert Margaret Mitchell of Hugging Face, have developed a dataset designed to identify and measure bias in these systems. The findings show how models trained on data in many languages can inadvertently amplify harmful stereotypes and carry them into new cultural contexts, raising significant ethical concerns about the global impact of AI-driven technologies.