Photo by cottonbro studio on Pexels

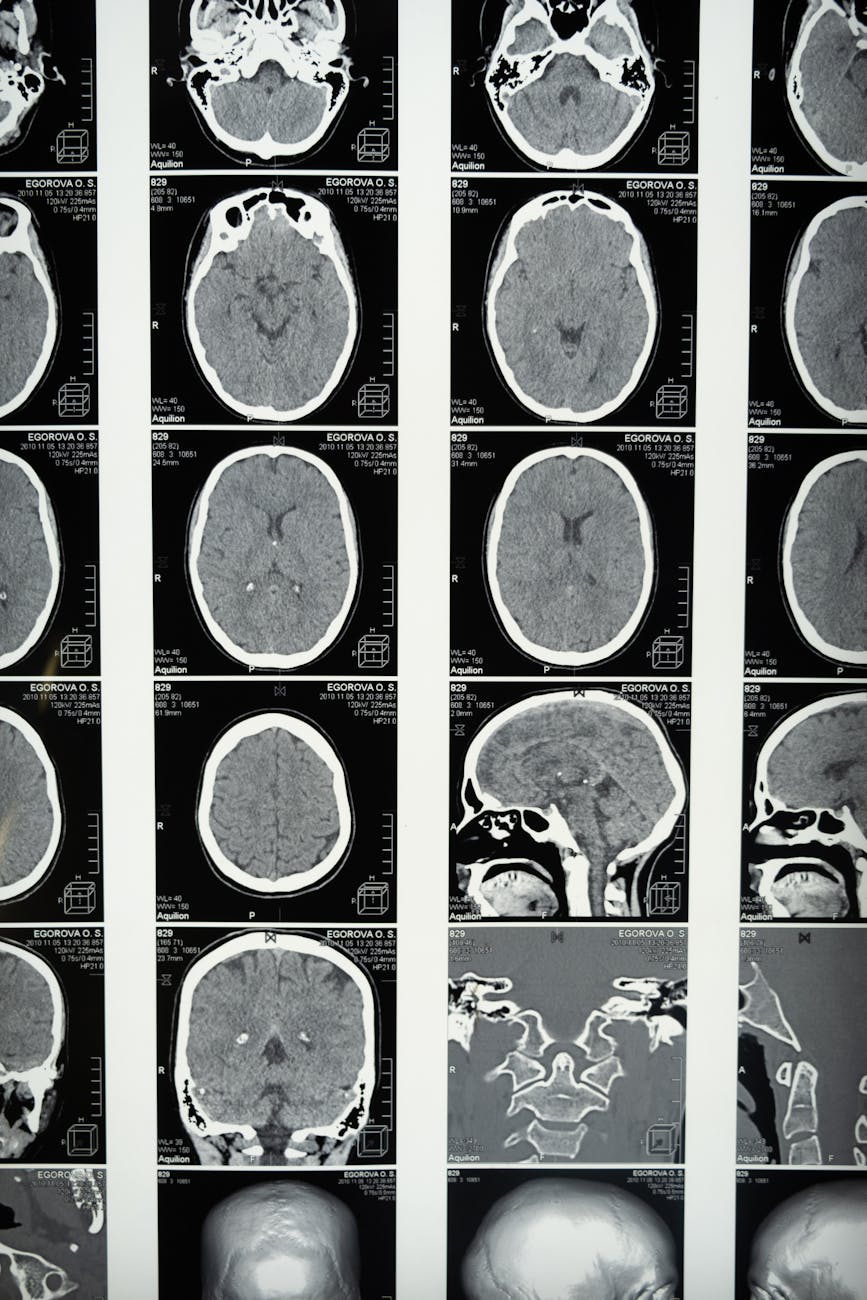

Google’s Articulate Medical Intelligence Explorer (AMIE), an AI designed for medical diagnosis, has received a significant upgrade: visual understanding. AMIE can now analyze medical images such as photos of skin rashes and electrocardiogram (ECG) tracings, extending its diagnostic capabilities beyond text-only consultations. The enhancement builds on the Gemini 2.0 Flash model and a ‘state-aware reasoning framework’ that lets AMIE adapt its questions and responses to the information it has gathered so far, much as a human doctor would.

The AI was trained using realistic patient simulations that incorporated medical images from resources such as the PTB-XL ECG database and the SCIN dermatology image set. In tests modeled on the clinical exams used to evaluate medical students, AMIE interacted with actors who portrayed patients and uploaded images during the consultation. AMIE often surpassed human primary care physicians in interpreting the visual data and in diagnostic accuracy. In some cases, the actors also rated the AI as more empathetic and trustworthy than the physicians.

Google is collaborating with Beth Israel Deaconess Medical Center to evaluate AMIE’s performance in actual clinical environments. The company emphasizes the limitations of the simulation study, acknowledging that scripted scenarios with actors differ from the complexities of real-world healthcare. Future development will focus on enabling AMIE to process real-time video and audio interactions.
