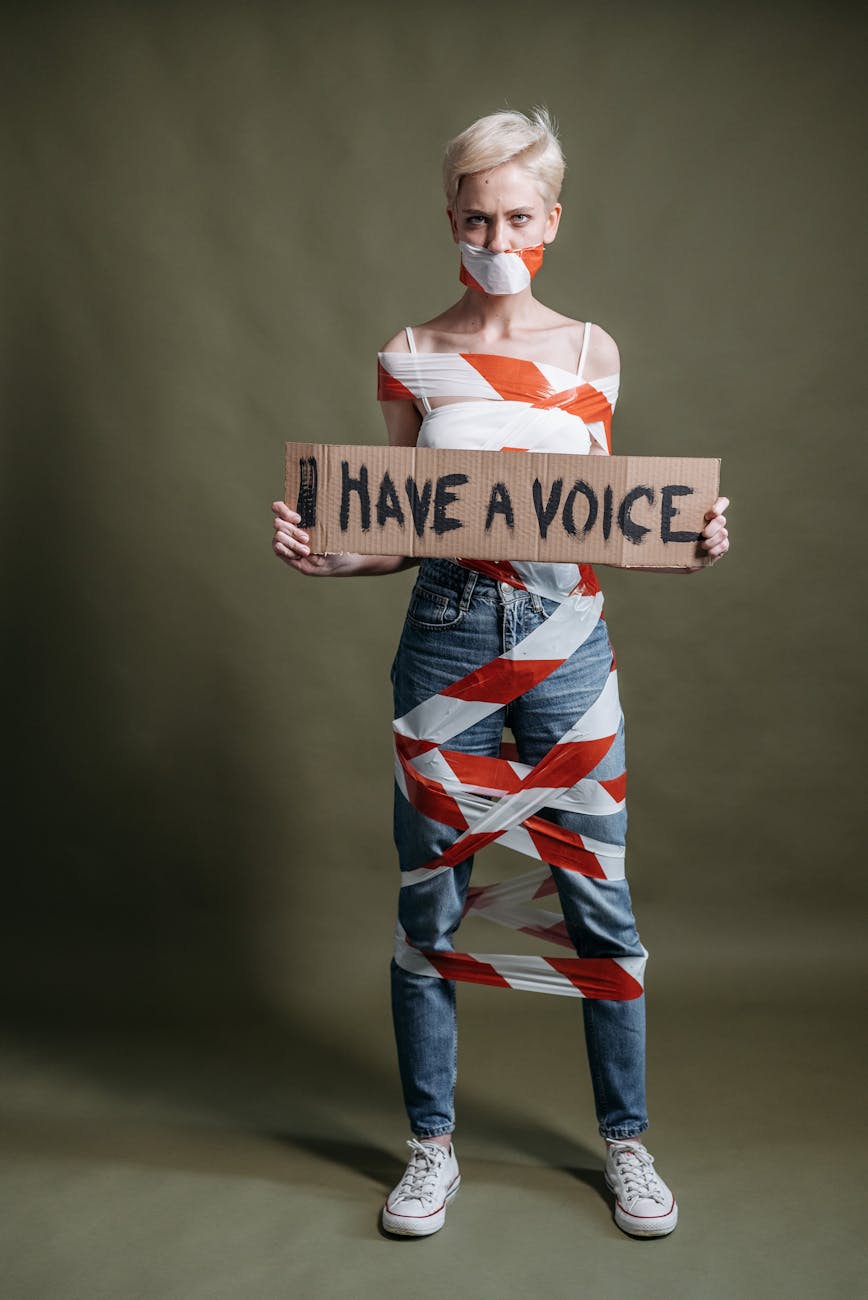

Photo by Pavel Danilyuk on Pexels

New research, highlighted in a recent online post, investigates how Large Language Models (LLMs) behave at a temperature of zero. Temperature controls how randomly the model samples each next token. A setting of zero is conventionally treated as greedy decoding, in which the model always picks its single most probable token, so the same prompt should, in principle, produce the same output every time. The study appears to examine how this enforced determinism affects output quality, creativity, and predictability, which could offer insight into how LLMs generate text. The original submission can be found online [link] along with associated discussion [comments].
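The following minimal Python sketch makes the mechanism concrete. It is not taken from the study. It assumes a toy list of raw scores (logits) for three candidate tokens, and the function names `softmax_with_temperature` and `pick_next_token` are hypothetical. Dividing the logits by the temperature before the softmax sharpens the distribution as the temperature falls. A temperature of exactly zero is special-cased as argmax, because dividing by zero is undefined.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by a temperature > 0."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pick_next_token(logits, temperature, rng=random):
    """Return the index of the next token (hypothetical helper).

    Assumption: temperature == 0 is treated as greedy decoding (argmax),
    a common convention, since the softmax formula divides by temperature.
    """
    if temperature == 0:
        # Greedy: always the highest-scoring index (first one on ties).
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax_with_temperature(logits, temperature)
    # Stochastic: draw one index in proportion to its probability.
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]
```

With the illustrative logits `[2.0, 1.0, 0.5]`, `pick_next_token(logits, 0)` returns index 0 on every call. At temperature 1.0 the three indices are instead sampled with probabilities of roughly 0.63, 0.23, and 0.14, so repeated calls can differ.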