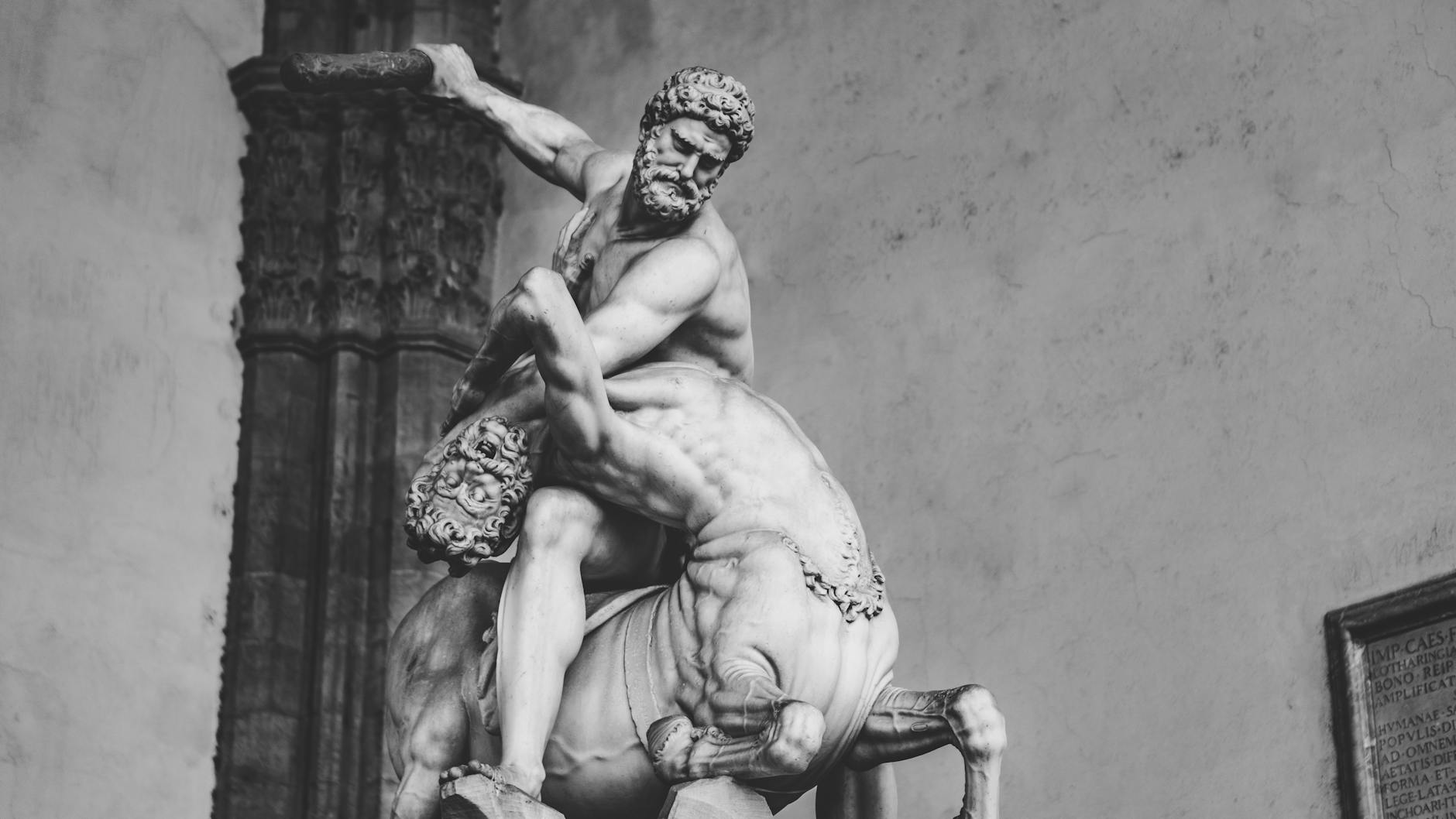

Photo by Chait Goli on Pexels

Artificial intelligence is increasingly used as a tool for studying human cognition. Researchers are using the predictive capabilities of AI models, especially large language models (LLMs), to investigate the mechanisms underlying thought and behavior, even though these models are built very differently from the human brain.

A notable study published in *Nature* introduced Centaur, an LLM fine-tuned on data from 160 psychological experiments. Centaur predicted human behavior more accurately than conventional psychological models, which suggests it could help researchers formulate new theories about the human mind. However, some experts caution that Centaur’s success might stem from its sheer size and complexity rather than from any genuine reflection of human cognitive processes. An alternative strategy is to use smaller, more transparent neural networks. Although these simpler models predict less accurately, they make it easier to generate testable hypotheses about specific cognitive functions.

The ongoing challenge is to balance predictive accuracy with interpretability. As Marcelo Mattar of New York University emphasizes, capturing intricate behaviors demands larger, more complex networks, and those networks are in turn harder to decipher. The ambition is eventually to reconcile predictive power with genuine understanding, which could advance the study of complex systems ranging from the human brain to climate patterns.