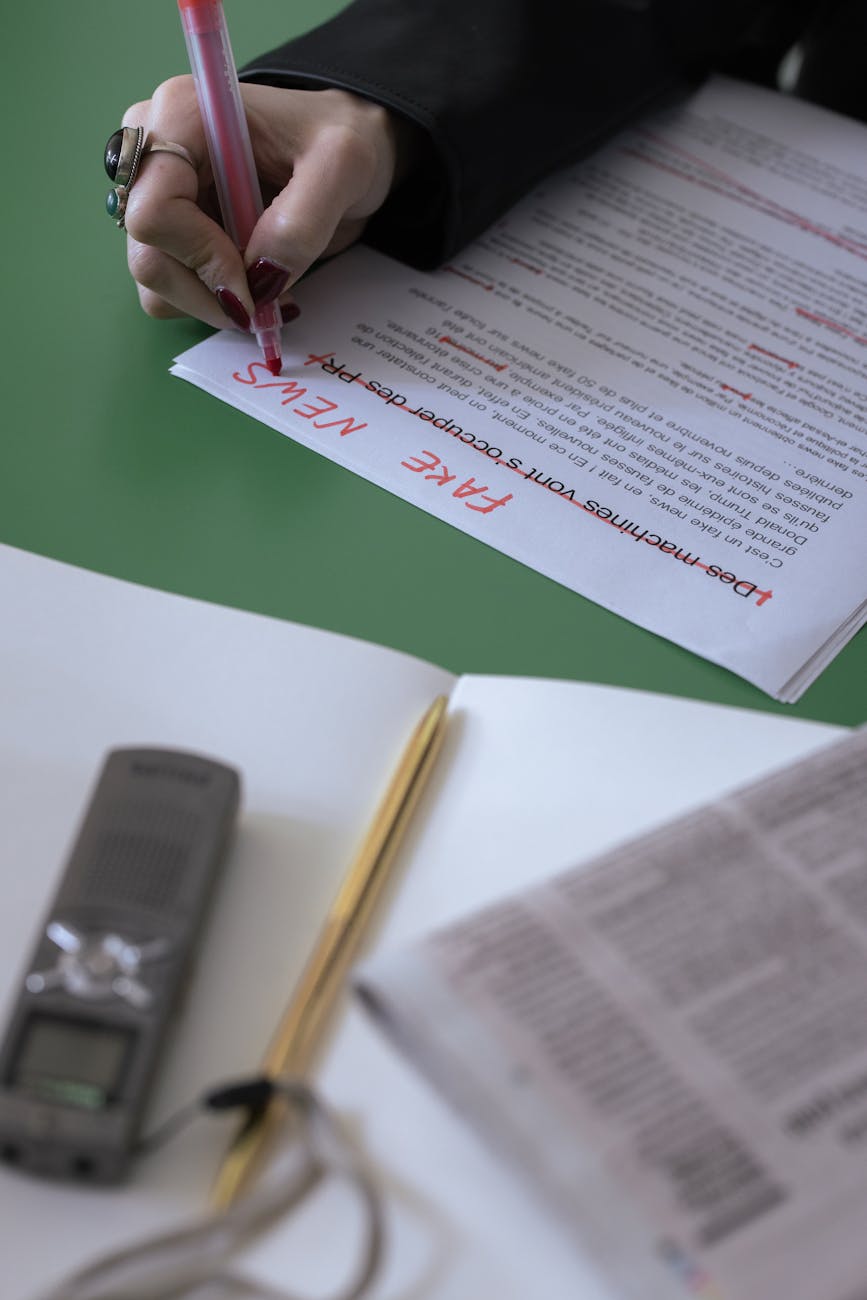

Photo by cottonbro studio on Pexels

A new study reveals a troubling trend: AI chatbots are increasingly omitting crucial medical disclaimers, even when responding to health-related inquiries. This absence of caution raises significant concerns about users mistaking AI-generated content for reliable medical advice.

Led by Sonali Sharma at Stanford University School of Medicine, the research (posted on arXiv and not yet peer reviewed) finds a sharp decline in disclaimers across leading AI models from companies including OpenAI, Anthropic, DeepSeek, Google, and xAI. Earlier versions of these models often warned users not to rely on their medical advice. Now fewer than 1% of their responses include such a warning when answering health questions or interpreting medical images.

Experts warn that dropping disclaimers, combined with the pervasive hype surrounding AI's capabilities, could lead users to overestimate how reliably chatbots can provide medical guidance. The study suggests that the disclaimers may have disappeared as AI companies compete for users. Reports of Reddit users actively seeking ways to bypass the disclaimers that remain further underscore the potential for misuse and the need for caution.

While some companies declined to comment, OpenAI pointed to its terms of service, which state that AI outputs should not be used for diagnostic purposes and that users are ultimately responsible for how they interpret them. MIT researcher Pat Pataranutaporn emphasizes that although AI models can generate convincing, scientific-sounding responses, they lack genuine understanding, which makes it difficult for users to judge whether an answer is accurate. The disappearance of disclaimers therefore poses a significant risk.

The study underscores the urgent need for AI providers to establish and maintain clear guidelines on the limitations of AI in healthcare, so that users understand the risks involved. Ongoing discussion on Reddit about AI-generated medical information suggests the issue is drawing public attention as well.