Photo by Google DeepMind on Pexels

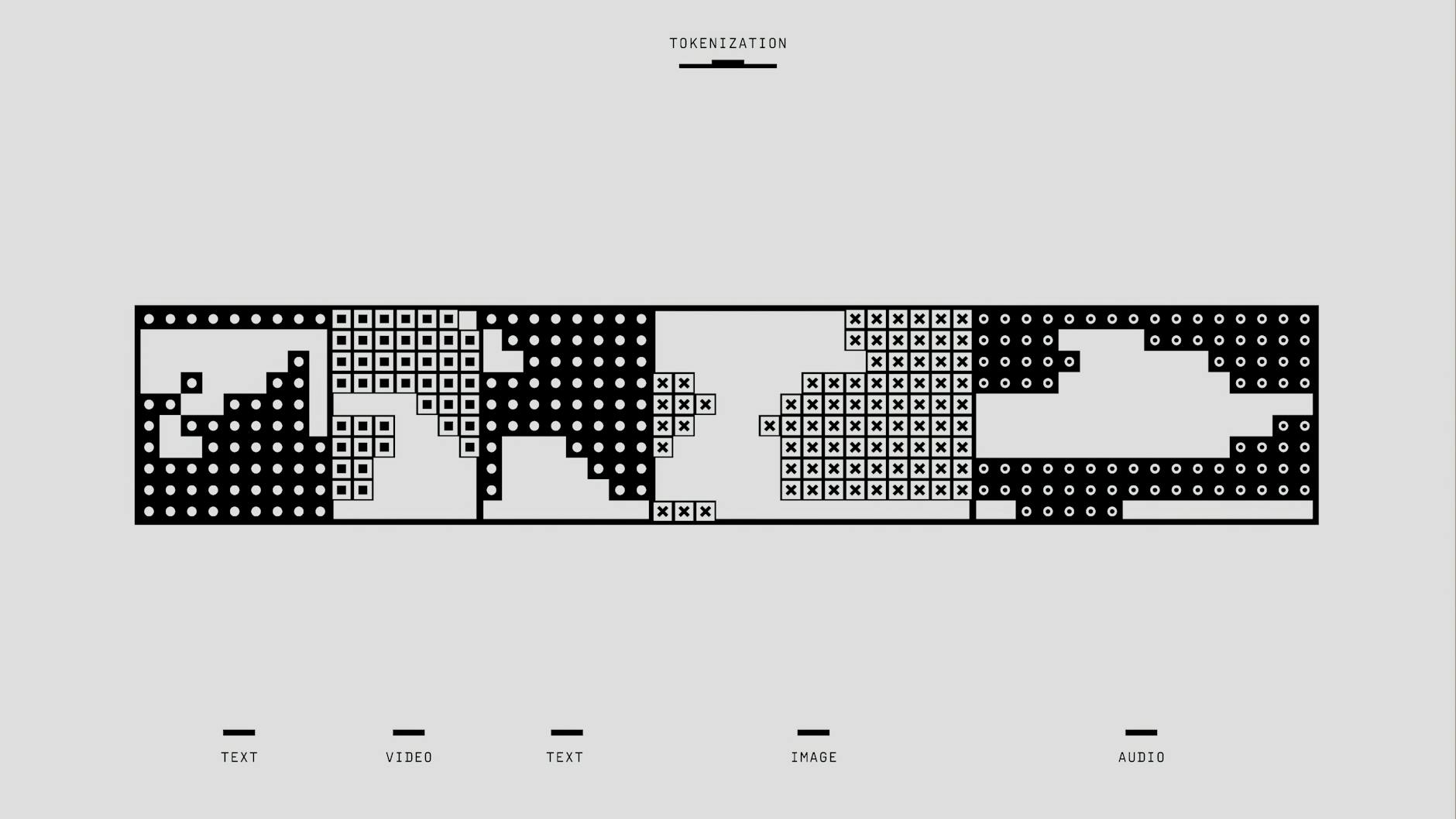

Groq’s tokenization is under scrutiny after a user reported an unexpectedly high token count for a trivial query: the Groq/compound-mini model reportedly counted the input ‘hey’ as 507 tokens. The report has prompted debate within the AI community about whether Groq’s token accounting is inefficient or anomalous. The original observation and the discussion that followed are on Reddit: https://old.reddit.com/r/artificial/comments/1obpcid/why_groq_why/
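To put the reported figure in perspective, the sketch below compares a reported token count with a rough estimate for the text the user actually typed. It is illustrative only and makes several assumptions: the `usage` dictionary with a `prompt_tokens` key follows the shape used by OpenAI-compatible chat APIs and is filled in by hand here, the helper `reported_overhead` is hypothetical, and the four-characters-per-token figure is a common rule of thumb for English text, not Groq's tokenizer. It says nothing about why the count is high.

```python
def reported_overhead(usage: dict, user_text: str, chars_per_token: float = 4.0) -> dict:
    """Compare a reported prompt token count with a rough estimate for user_text."""
    # Assumption: roughly 4 characters per token for English text.
    # This is a heuristic, not the tokenizer any provider actually uses.
    estimated = max(1, round(len(user_text) / chars_per_token))
    reported = usage["prompt_tokens"]
    return {
        "reported": reported,
        "estimated_user_tokens": estimated,
        # Tokens the user's visible text alone does not account for.
        "unexplained": reported - estimated,
    }


# Hypothetical usage payload mirroring the figure reported for the input "hey".
usage = {"prompt_tokens": 507}
result = reported_overhead(usage, "hey")
print(result)
```

With these numbers, nearly all of the reported count is unaccounted for by the three characters the user typed, which is the gap the Reddit thread is asking about.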