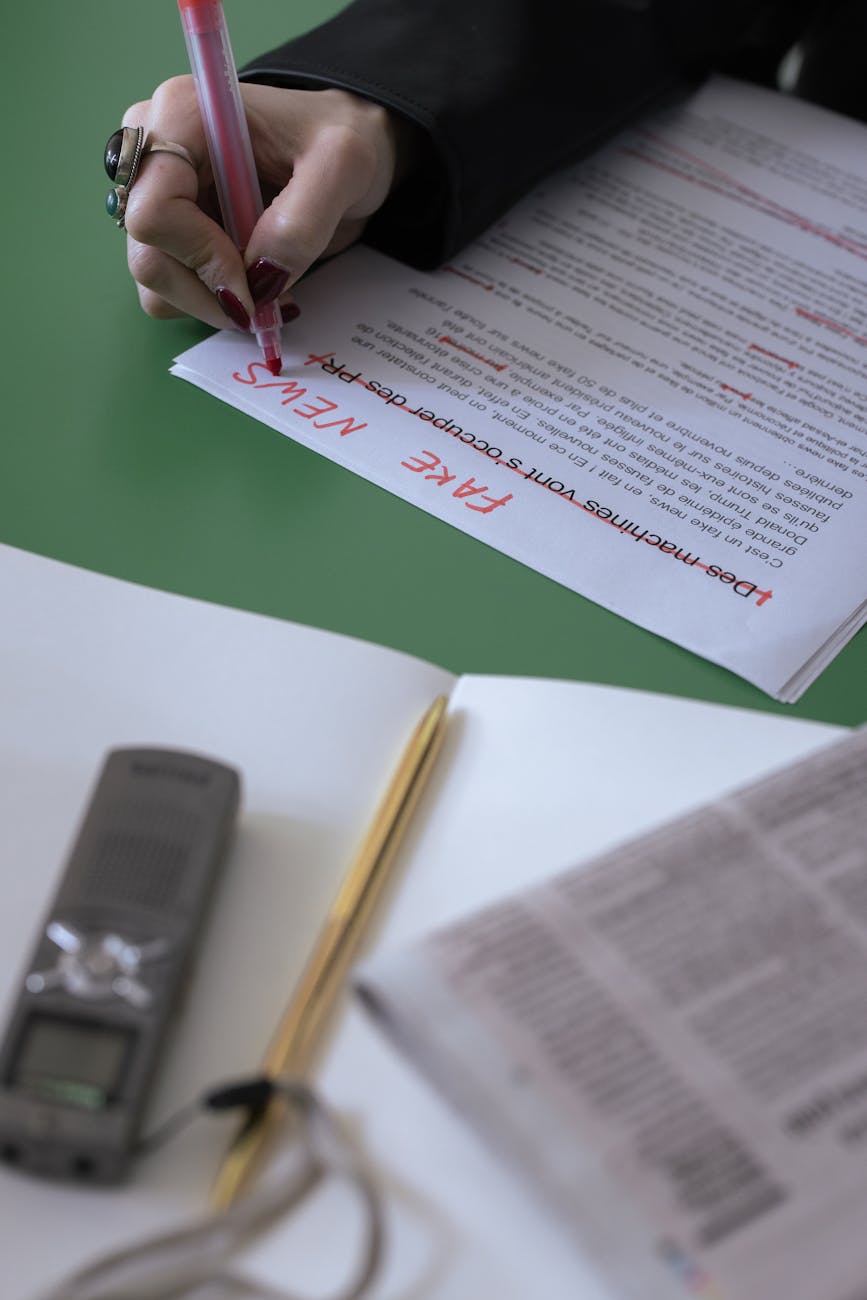

Photo by cottonbro studio on Pexels

The FBI’s release of low-resolution photos of a person of interest in the Charlie Kirk shooting case has prompted internet users to run the images through AI enhancement tools. Experts caution against the practice, warning that AI can introduce inaccuracies and produce misleading likenesses. Several AI-generated variations of the image, made with tools such as X’s Grok and ChatGPT, have already surfaced online.

The concern is that AI upscaling infers detail rather than revealing it: the model fills in missing pixels with plausible guesses, so the result can contain features that were never in the photo. Earlier cases have shown how unreliable such enhancements can be. The FBI’s original post asked the public to help identify the individual from the original images, and the fear is that AI-altered versions could be mistaken for factual evidence. The original FBI request and images can be found here: [FBI Post Link Placeholder]
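
To make the point about inferred detail concrete, here is a minimal Python sketch. It is a toy illustration, not how Grok, ChatGPT, or any real enhancement tool works. The functions `downsample` and `upscale_nearest` and the two tiny 4x4 grayscale "images" are hypothetical, invented for this example. It shows that two different high-resolution images can shrink to exactly the same low-resolution image, so no upscaler can know which one was the original.

```python
def downsample(img, factor):
    """Shrink a grayscale image (list of rows) by averaging factor x factor blocks."""
    h, w = len(img), len(img[0])
    return [
        [
            sum(
                img[y * factor + dy][x * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ) / factor ** 2
            for x in range(w // factor)
        ]
        for y in range(h // factor)
    ]


def upscale_nearest(img, factor):
    """Enlarge an image by repeating each pixel; no new information is created."""
    return [
        [v for v in row for _ in range(factor)]
        for row in img
        for _ in range(factor)
    ]


# Two different made-up "high-resolution" images.
a = [
    [0, 200, 50, 50],
    [200, 0, 50, 50],
    [10, 10, 255, 0],
    [10, 10, 0, 255],
]
b = [
    [100, 100, 100, 0],
    [100, 100, 0, 100],
    [0, 20, 127, 128],
    [20, 0, 128, 127],
]

low_a = downsample(a, 2)
low_b = downsample(b, 2)

print(a != b)          # the originals differ
print(low_a == low_b)  # yet their low-resolution versions are identical
print(upscale_nearest(low_a, 2) == a)  # plain upscaling does not recover the original
```

Because the fine detail is discarded when the image is shrunk, any tool that outputs a sharp, detailed face from a blurry one has to choose among many possible originals. An AI upscaler makes that choice from patterns in its training data, not from the photo itself.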